Using cloud services has many advantages, and one of them is the ability to scale almost without limit. But the same ability to create resources such as servers and databases quickly can drive up an organisation’s operational costs if it is not used carefully. Teams that don’t follow a few basic guidelines end up paying high monthly bills for cloud services.

Here are some tips to avoid that trap and optimize your cloud spending.

- SCALE ON DEMAND

- RIGHT SIZE RESOURCES

- USE ONLY WHEN NEEDED

- BUY SAVINGS PLANS OR RESERVED INSTANCES

- USE SPOT INSTANCES

- UPGRADE INSTANCES TO LATEST RELEASES

SCALE ON DEMAND

AWS, like any other cloud service provider, lets you scale on demand: you can add compute, memory, storage and other resources to your infrastructure as and when you need them. There is no need to plan capacity 6 months or 1 year ahead and provision those resources up front. If you do, you end up paying today for resources you will only need 6 months or 1 year from now.
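To make the cost of planning ahead concrete, here is a small Python sketch that compares provisioning for the projected peak from day one against provisioning, each month, only what that month needs. The monthly demand figures are hypothetical, and the sketch assumes every instance-month costs the same.

```python
def upfront_vs_on_demand(monthly_need: list[int]) -> tuple[int, int, int]:
    """Return (upfront, on_demand, wasted), all in instance-months.

    upfront:   provision the projected peak in month one and keep it all year
    on_demand: provision each month only what that month actually needs
    wasted:    instance-months paid for but not needed under the upfront approach
    """
    peak = max(monthly_need)
    upfront = peak * len(monthly_need)
    on_demand = sum(monthly_need)
    return upfront, on_demand, upfront - on_demand


# Hypothetical growth from 2 to 8 instances over one year.
need = [2, 2, 3, 3, 4, 4, 5, 5, 6, 6, 8, 8]
upfront, on_demand, wasted = upfront_vs_on_demand(need)
print(f"upfront={upfront} on_demand={on_demand} wasted={wasted} ({wasted / upfront:.0%})")
```

With these assumed numbers, 40 of the 96 instance-months paid for up front (about 42%) would sit idle.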

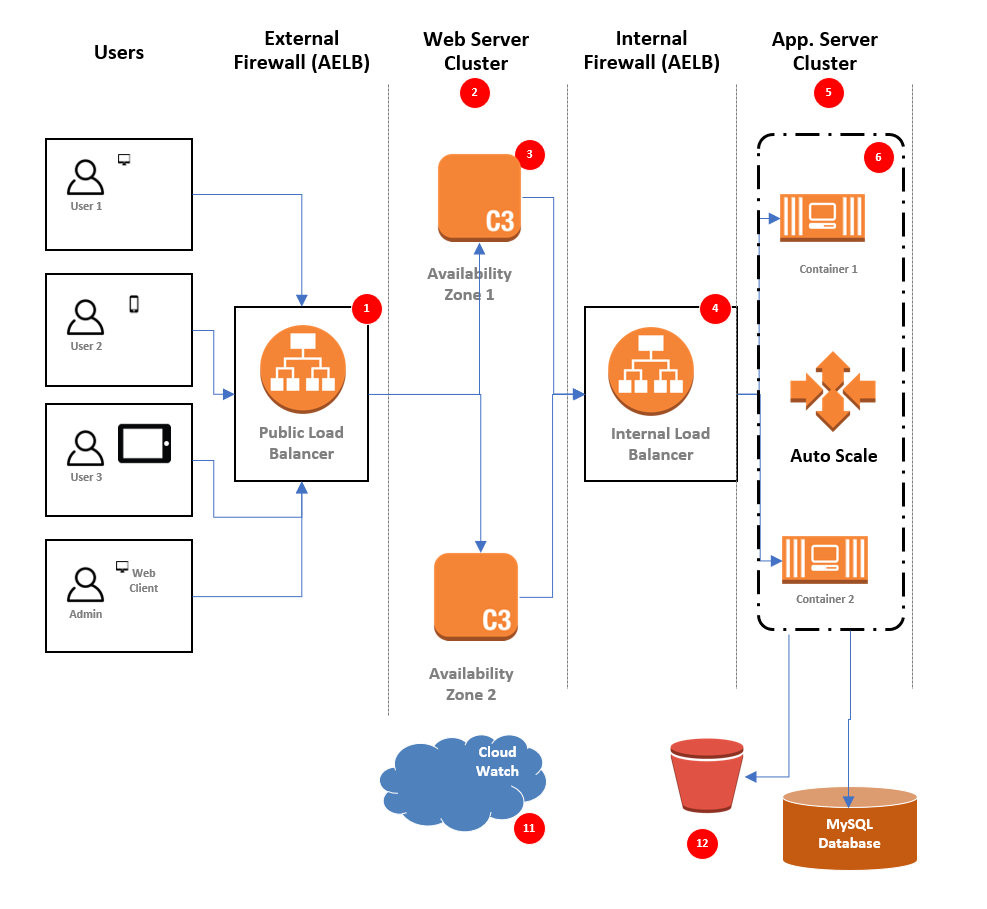

Design your application to be stateless which can scale horizontally. Using Autoscaling feature of EC2 services you can scale on demand. Using containers and container orchestration services like ECS or EKS, Kubernetes makes it simple to scale on demand.

Here is a reference architecture diagram of auto scalable containerized application.
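Horizontal autoscalers generally decide how many copies to run by comparing an observed metric with a target. The Python sketch below implements the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, including its default 10% tolerance band. EC2 Auto Scaling target tracking policies follow a similar idea, but AWS's exact algorithm is not reproduced here, and the min/max bounds are illustrative assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band, keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, like an Auto Scaling group's min/max size.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))  # → 6 (scale out)
print(desired_replicas(4, current_metric=30, target_metric=60))  # → 2 (scale in)
```

Because the rule works on a per-copy average, it only behaves well when any copy can serve any request, which is why the stateless design above matters.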

You should monitor resource metrics such as CPU usage, memory usage, storage and network, and then scale resources vertically as needed. For example, say you start a database on Amazon RDS with a small instance class, and after a while its CPU usage is consistently high: you can then move the instance up to the next instance class. Monitoring tools such as Amazon CloudWatch, Prometheus with Grafana, or Datadog can track these infrastructure metrics for you.
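As a sketch of the RDS example above, the Python code below asks CloudWatch for a week of hourly average `CPUUtilization` for one DB instance and, if CPU was at or above 80% for at least 90% of those hours, requests the next instance class with `modify_db_instance`. The thresholds and the `NEXT_CLASS` upgrade path are hypothetical; `cloudwatch` and `rds` are expected to be boto3 clients (or test doubles with the same methods).

```python
from datetime import datetime, timedelta, timezone

# Hypothetical upgrade path; choose classes that suit your engine and workload.
NEXT_CLASS = {
    "db.t3.small": "db.t3.medium",
    "db.t3.medium": "db.t3.large",
    "db.t3.large": "db.t3.xlarge",
}


def cpu_consistently_high(cloudwatch, db_instance_id: str, threshold: float = 80.0,
                          days: int = 7, min_fraction: float = 0.9) -> bool:
    """True if at least min_fraction of the hourly CPU averages reached threshold."""
    end = datetime.now(timezone.utc)
    resp = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName="CPUUtilization",
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # one datapoint per hour
        Statistics=["Average"],
    )
    values = [point["Average"] for point in resp["Datapoints"]]
    if not values:
        return False  # no data: don't resize blindly
    high = sum(1 for v in values if v >= threshold)
    return high / len(values) >= min_fraction


def scale_up_if_needed(cloudwatch, rds, db_instance_id: str, current_class: str):
    """Request the next instance class when CPU has been consistently high."""
    next_class = NEXT_CLASS.get(current_class)
    if next_class is None or not cpu_consistently_high(cloudwatch, db_instance_id):
        return None
    # ApplyImmediately=False defers the change to the next maintenance window.
    rds.modify_db_instance(
        DBInstanceIdentifier=db_instance_id,
        DBInstanceClass=next_class,
        ApplyImmediately=False,
    )
    return next_class
```

With boto3 you would call it as `scale_up_if_needed(boto3.client("cloudwatch"), boto3.client("rds"), "my-db", "db.t3.small")`, where `my-db` is a placeholder identifier.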

Bottom line:

- Don’t overprovision cloud resources

- Scale horizontally on demand

- Scale vertically based on usage patterns, when your monitoring metrics indicate it

RIGHT SIZE RESOURCES

Because the cloud lets you choose any infrastructure resource you want, people often overprovision to cover up performance problems in the code. Performance and scalability are often confused. I define performance as the response time of the system for a single transaction or user. Measure that without load first and use it as your benchmark. Then run load tests that gradually increase the number of transactions or users to find out how much load a single server can handle while still meeting that benchmark. To serve more users or transactions beyond that point, scale horizontally (scale out). Don’t add resources to hide performance bottlenecks in your code.
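Once a load test tells you how much one server can sustain, sizing the fleet is simple arithmetic. The Python sketch below turns a benchmarked per-server throughput into a server count; the spare-capacity `headroom` and the `min_servers` floor are assumptions you would tune, not figures from any AWS guidance.

```python
import math


def servers_needed(peak_rps: float, per_server_rps: float,
                   headroom: float = 0.25, min_servers: int = 2) -> int:
    """Number of identical servers needed to handle peak_rps.

    per_server_rps: throughput one server sustained in your load test while
                    still meeting the single-user response-time benchmark
    headroom:       fraction of each server's capacity kept spare for spikes
    min_servers:    floor kept for availability
    """
    if per_server_rps <= 0 or not 0 <= headroom < 1:
        raise ValueError("per_server_rps must be > 0 and headroom in [0, 1)")
    usable = per_server_rps * (1 - headroom)
    return max(min_servers, math.ceil(peak_rps / usable))


print(servers_needed(peak_rps=900, per_server_rps=200))  # → 6
```

If the per-server figure turns out to be too low, the fix is usually in the code being benchmarked, not a bigger instance type.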

Bottom line:

- Provision right-sized infrastructure resources for your workload.

- Scale up and down as you grow and add more users.

USE ONLY WHEN NEEDED

AWS exposes its infrastructure through web service APIs, so almost everything you do with your cloud resources can be automated. Using the AWS SDK, you can automatically stop and start resources on a schedule or on demand, especially in non-production environments. Development and test environments don’t need to run 24×7. Give your developers and testers a way to stop and start their environments whenever they need them, and you cut your AWS bill. AWS tools such as Trusted Advisor and CloudWatch give you data about how your AWS resources are being utilized.
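A minimal sketch of such a schedule in Python, assuming non-production instances carry a hypothetical `Environment` tag (for example `dev`) and that working hours are Monday-Friday, 08:00-18:00 in whatever time zone `now` is expressed in. It uses the EC2 client calls `describe_instances`, `start_instances` and `stop_instances`; you would run `reconcile` periodically, for example from cron or a scheduled Lambda function, passing `boto3.client("ec2")`.

```python
from datetime import datetime

# Hypothetical tag key marking which environment an instance belongs to.
ENV_TAG_KEY = "Environment"


def in_working_hours(now: datetime, start_hour: int = 8, end_hour: int = 18) -> bool:
    """True Monday-Friday between start_hour (inclusive) and end_hour (exclusive)."""
    return now.weekday() < 5 and start_hour <= now.hour < end_hour


def instance_ids(ec2, env: str, state: str) -> list[str]:
    """IDs of instances tagged with the given environment and in the given state."""
    kwargs = {"Filters": [
        {"Name": f"tag:{ENV_TAG_KEY}", "Values": [env]},
        {"Name": "instance-state-name", "Values": [state]},
    ]}
    ids = []
    while True:  # follow NextToken pagination
        page = ec2.describe_instances(**kwargs)
        for reservation in page.get("Reservations", []):
            ids.extend(i["InstanceId"] for i in reservation.get("Instances", []))
        token = page.get("NextToken")
        if not token:
            return ids
        kwargs["NextToken"] = token


def reconcile(ec2, env: str, now: datetime) -> tuple[str, list[str]]:
    """Start the environment during working hours and stop it outside them."""
    if in_working_hours(now):
        ids = instance_ids(ec2, env, "stopped")
        if ids:
            ec2.start_instances(InstanceIds=ids)
        return "start", ids
    ids = instance_ids(ec2, env, "running")
    if ids:
        ec2.stop_instances(InstanceIds=ids)
    return "stop", ids
```

The same tag-and-schedule pattern can give developers an on-demand "start my environment" button that simply calls `start_instances` outside the schedule.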

Bottom line:

- Shut down resources when they are not needed

- Schedule automatic shutdown and startup based on usage patterns

- Save around two-thirds of your non-production environments’ compute cost by scheduling when cloud resources stop and start. (Resources are normally used for about 8 hours a day, which is only 33% of the time.)
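The two-thirds figure follows from simple instance-hour arithmetic, sketched below in Python. It assumes you pay only for running hours; storage such as EBS volumes is still billed while an instance is stopped, and a stopped RDS instance is automatically started again after seven days, so real savings on the total bill will be somewhat lower.

```python
def schedule_savings(hours_per_day: float, days_per_week: int = 7) -> float:
    """Fraction of instance-hours saved compared with running 24x7."""
    running = hours_per_day * days_per_week
    return 1 - running / (24 * 7)


print(f"{schedule_savings(8):.1%}")                   # 8 h/day, every day  → 66.7%
print(f"{schedule_savings(8, days_per_week=5):.1%}")  # 8 h/day, weekdays only → 76.2%
```

Stopping environments over the weekend as well pushes the saving from roughly two-thirds to roughly three-quarters.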

BUY SAVINGS PLANS OR RESERVED INSTANCES

AWS discounts your bill if you commit to a certain amount of compute usage. Savings Plans are more flexible than Reserved Instances. Commitment terms range from 1 year with no upfront payment to 3 years paid all upfront. You can choose the plan that fits your needs and save around 20% or more on compute resources such as EC2, Lambda and Fargate. The recommendation tools in AWS Cost Explorer can help you select the right Savings Plan. You can also reserve RDS instances if you are certain you will keep using them.
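Sizing the commitment is where the savings are won or lost: you pay the hourly commitment whether or not you use it. The Python sketch below is a deliberately simplified model with a single flat discount; real Savings Plans rates vary by instance family, Region, term and payment option, so the 25% discount and the $10/h usage are assumptions for illustration only.

```python
def hourly_cost_with_plan(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Hourly bill under a simplified Savings Plan model.

    on_demand_usage: eligible compute usage per hour, priced at On-Demand rates ($/h)
    commitment:      Savings Plan commitment ($/h), charged whether used or not
    discount:        assumed flat discount of plan rates vs. On-Demand (0.25 == 25%)
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    # On-Demand-equivalent usage that the commitment can absorb.
    covered = commitment / (1 - discount)
    # Usage beyond that is billed at normal On-Demand rates.
    overflow = max(0.0, on_demand_usage - covered)
    return commitment + overflow


def effective_savings(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Fraction saved compared with paying On-Demand for everything."""
    return 1 - hourly_cost_with_plan(on_demand_usage, commitment, discount) / on_demand_usage


# Hypothetical steady usage of $10/h at On-Demand rates and a 25% plan discount.
for commitment in (6.0, 7.5, 9.0):
    print(f"commit ${commitment:.2f}/h -> saves {effective_savings(10.0, commitment, 0.25):.0%}")
```

Under these assumptions, committing $7.50/h covers the usage exactly and saves 25%, committing $6/h saves 20%, and over-committing at $9/h saves only 10% because part of the commitment goes unused. This is why the Cost Explorer recommendations, which are based on your past usage, are a sensible starting point.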

Bottom line:

- Buy Savings Plans for cloud compute resources

- Reserve RDS instances for databases

USE SPOT INSTANCES

AWS offers savings of up to 90% if you use EC2 Spot Instances instead of On-Demand Instances. The main drawback is that EC2 can interrupt a Spot Instance, with a two-minute warning, when it needs the capacity back or when the Spot price rises above the maximum price you set. You can automate your infrastructure to provision new resources whenever Spot Instances are taken away. Spot Instances are highly recommended for non-production environments, whether in your ECS or EKS cluster or in an Auto Scaling group, and in production you can mix Spot Instances with On-Demand Instances.
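For an EC2 Auto Scaling group, the mix of Spot and On-Demand capacity can be declared with a mixed instances policy. The Python sketch below builds one and passes it to `create_auto_scaling_group` on an object expected to behave like a boto3 Auto Scaling client. The launch template name, instance types, subnet IDs and the 1-instance base / 25% On-Demand split are hypothetical, and the launch template must already exist. Because the group maintains its desired capacity, it launches replacements when Spot Instances are reclaimed.

```python
def mixed_instances_policy(launch_template_name: str, instance_types: list[str],
                           on_demand_base: int = 1,
                           on_demand_pct_above_base: int = 25) -> dict:
    """Build a MixedInstancesPolicy for an EC2 Auto Scaling group.

    The first `on_demand_base` instances are On-Demand; above that,
    `on_demand_pct_above_base` percent are On-Demand and the rest are Spot.
    """
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # Several interchangeable types give Spot more capacity pools to draw from.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            "OnDemandBaseCapacity": on_demand_base,
            "OnDemandPercentageAboveBaseCapacity": on_demand_pct_above_base,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


def create_mixed_asg(autoscaling, name: str, policy: dict, subnet_ids: list[str],
                     min_size: int, max_size: int) -> None:
    """Create the group with a boto3 Auto Scaling client (or a compatible object)."""
    autoscaling.create_auto_scaling_group(
        AutoScalingGroupName=name,
        MinSize=min_size,
        MaxSize=max_size,
        VPCZoneIdentifier=",".join(subnet_ids),  # comma-separated subnet IDs
        MixedInstancesPolicy=policy,
    )
```

For a non-production group you might set `on_demand_base=0` and `on_demand_pct_above_base=0` to run entirely on Spot.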

Bottom line:

- Use Spot Instances mixed with On-Demand Instances in your clusters

UPGRADE INSTANCES TO LATEST RELEASES

Always check for the latest AWS instance types and use them. Newer generations usually offer better performance and are often cheaper as well. You can move an existing instance to a newer type by stopping it, changing its instance type, and starting it again.
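The stop, change, start sequence can be scripted with the EC2 client calls `stop_instances`, `modify_instance_attribute` and `start_instances`, plus the `instance_stopped` and `instance_running` waiters. The Python sketch below assumes an EBS-backed instance and a boto3 EC2 client (or compatible object). Be aware that stopping an instance discards instance store data and, without an Elastic IP, changes its public IPv4 address, and that moving from an older generation to a Nitro-based type may require ENA and NVMe drivers in the AMI.

```python
def change_instance_type(ec2, instance_id: str, new_type: str) -> None:
    """Stop an EC2 instance, switch its instance type, and start it again."""
    ec2.stop_instances(InstanceIds=[instance_id])
    # The type can only be changed once the instance is fully stopped.
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])
    ec2.modify_instance_attribute(InstanceId=instance_id, InstanceType={"Value": new_type})
    ec2.start_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
```

For example, `change_instance_type(boto3.client("ec2"), "i-0123456789abcdef0", "m7i.large")`, where the instance ID is a placeholder; schedule it in a maintenance window, since the instance is unavailable while stopped.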